Meet 'Chinese CLIP,' An Implementation of CLIP Pretrained on Large-Scale Chinese Datasets with Contrastive Learning - MarkTechPost

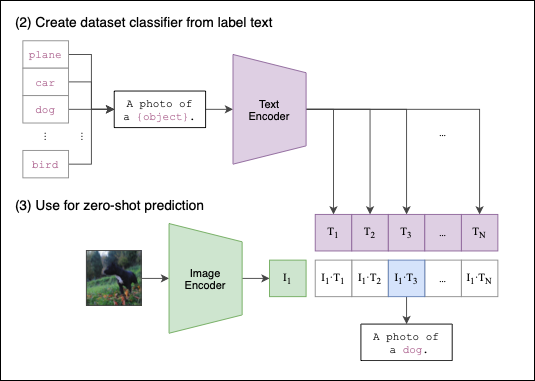

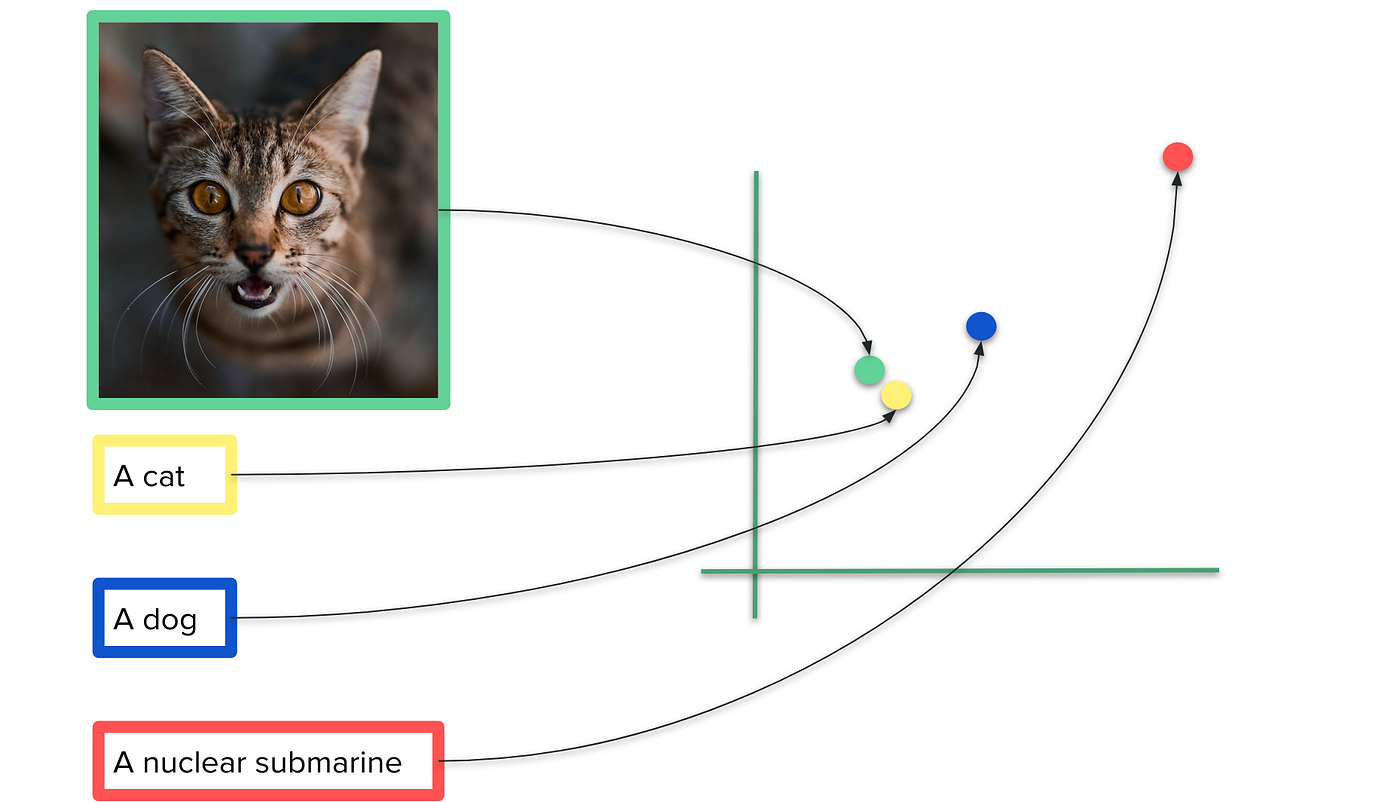

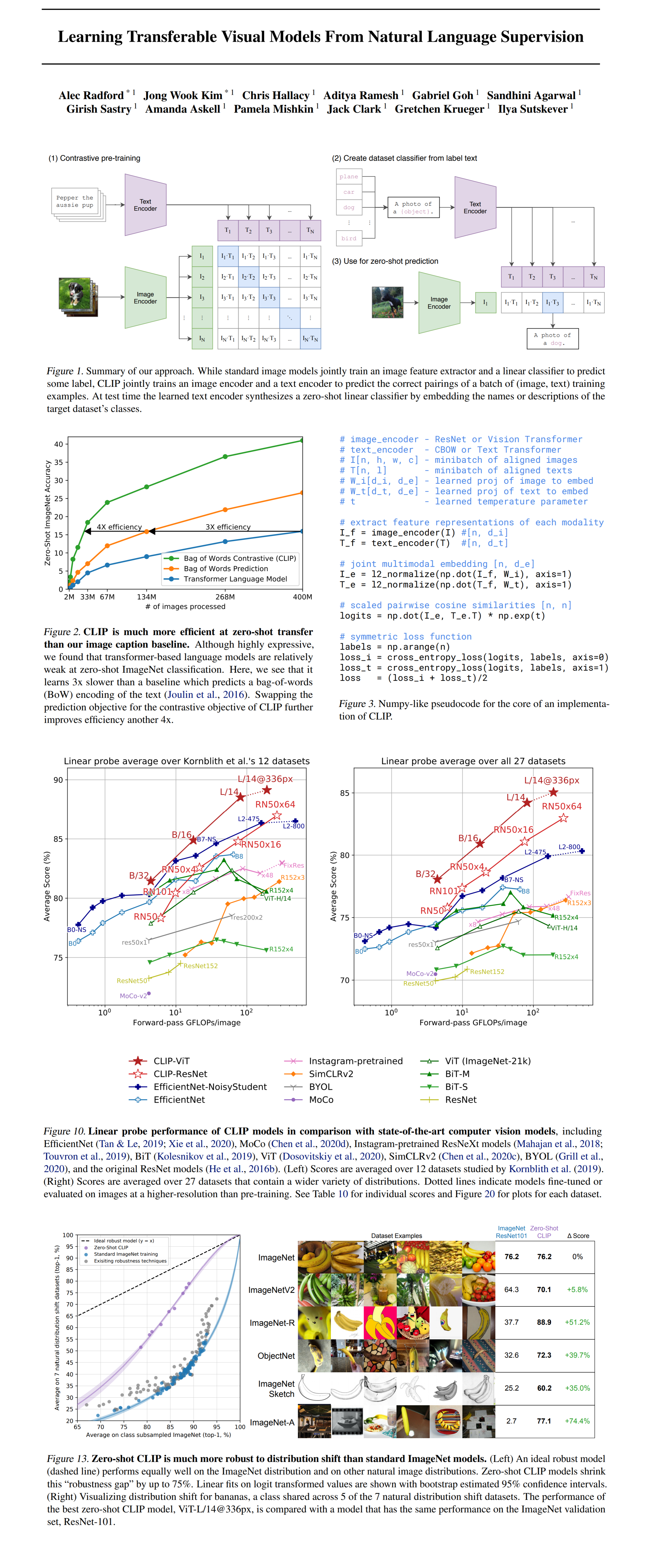

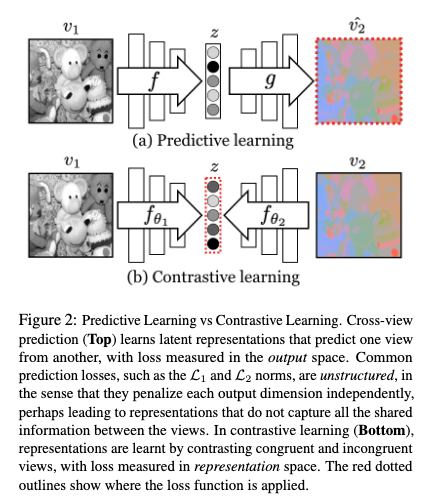

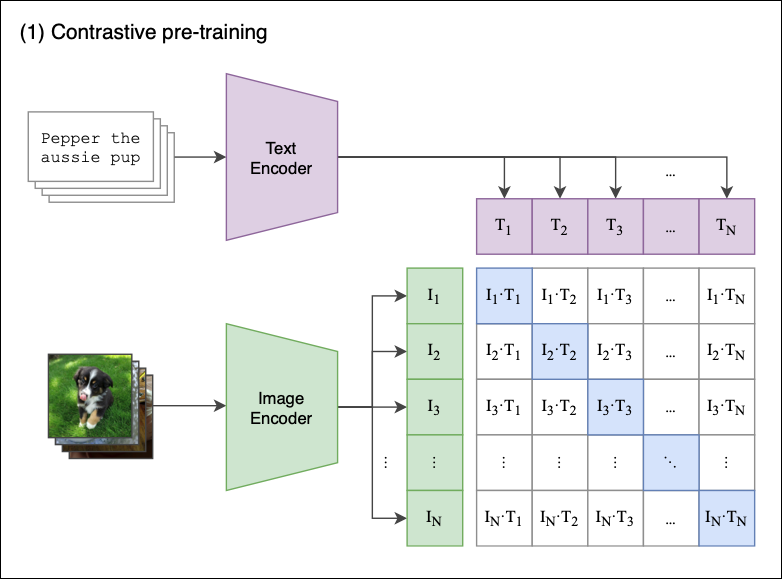

Understand CLIP (Contrastive Language-Image Pre-Training) — Visual Models from NLP | by mithil shah | Medium

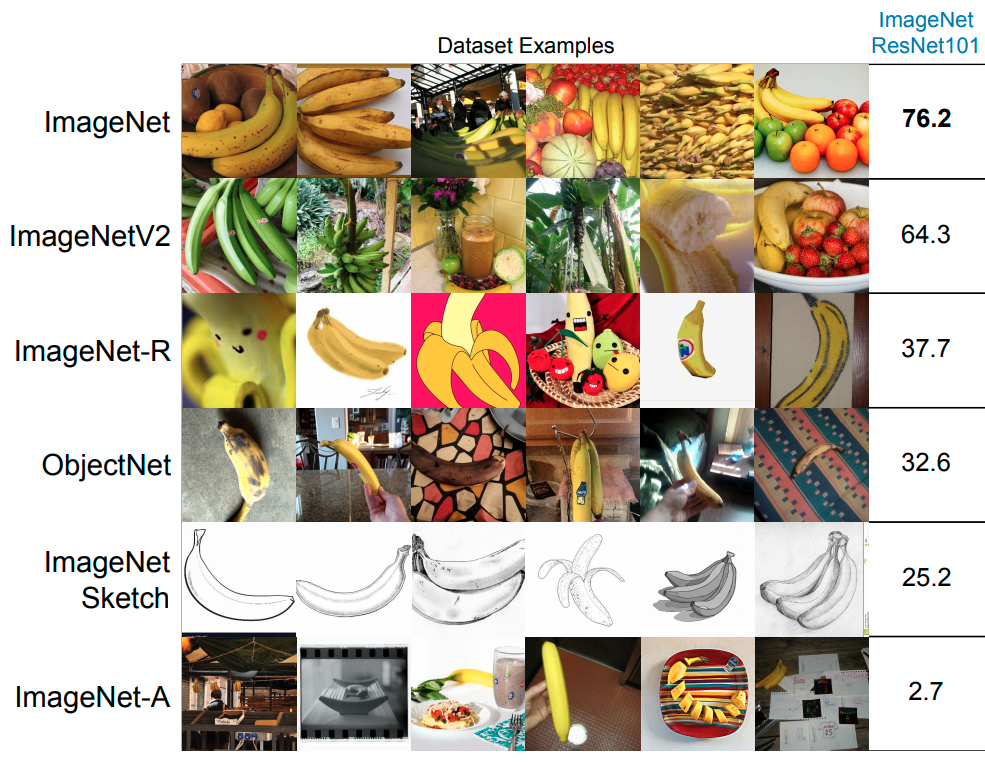

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

Review — CLIP: Learning Transferable Visual Models From Natural Language Supervision | by Sik-Ho Tsang | Medium

(PDF) LAION-400M: Open Dataset of CLIP-Filtered 400 Million Image-Text Pairs | Romain Beaumont - Academia.edu

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image